Maximize Security with Power Platform's Copilot Risk Assessment

Unlock Power Platform's Potential: Mastering Copilot Risk Assessments

Key Insights on Copilot Risk Assessment in Power Platform

Risk assessments remain crucial even though Microsoft aims to provide a responsible and secure AI experience with Copilot. An assessment helps organizations and developers get the most out of Copilot in Power Platform, and it shows why understanding the technology is key to preventing issues.

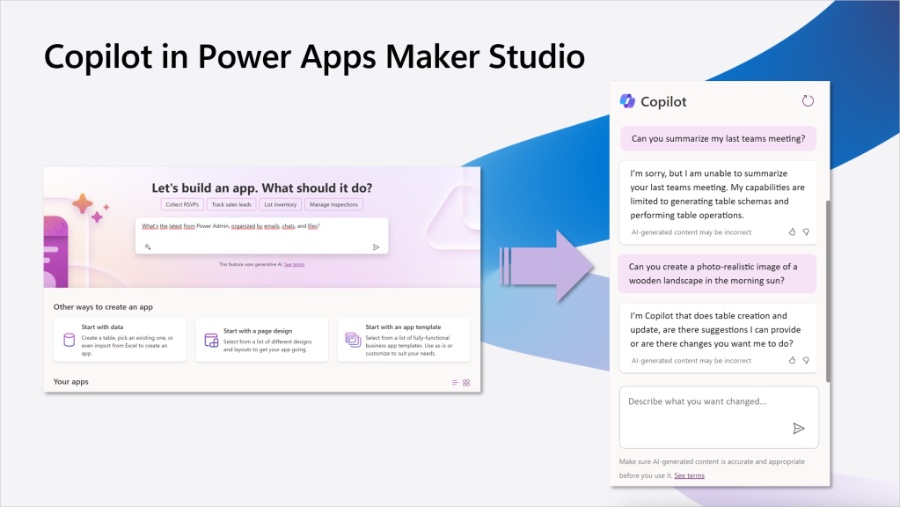

Power Apps Maker Studio is used to showcase the Copilot experience and to address common concerns such as data security in apps. The example shows Copilot generating an app and assisting throughout the design process, with a focus on table schemas and operations.

Copilot responds only to Power Apps-related prompts. It declines tasks outside app design, such as summarizing emails or generating images with DALL-E, which indicates that it is scoped to data relevant to app development.

Unexpected responses raise questions about data usage and security. A surprising answer involving the “VanArsdel Heating and Air Conditioning Canvas App” raises concerns about whether Copilot uses external data and which sources it draws on for its responses.

The name does not appear in the maker's own environment but does turn up in documentation, suggesting that Copilot may draw on Power Apps Docs and possibly Bing Search. This underscores the need for a risk assessment to understand how data is used.

Understanding the Importance of Risk Assessment for Copilot in Power Platform

Integrating AI technologies like Copilot into enterprise solutions like the Power Platform inevitably raises questions about security, data privacy, and overall effectiveness. For businesses that want to use these capabilities responsibly, a thorough risk assessment is not just beneficial but essential. It helps organizations understand how these AI services interact with sensitive data, whether that use complies with existing data protection regulations, and whether it aligns with corporate security policies.

Moreover, the limitations and operational boundaries of Copilot in Power Apps Maker Studio illustrate the current state of AI in enterprise environments. They point to the need for clear guidelines on how the AI may access and use organizational data and external information. This is especially important when unexpected AI responses could reveal underlying data management or privacy issues.

The need for ongoing vigilance and assessment is further underscored by cases where an AI like Copilot accesses, or potentially shares, data through unexpected channels. Organizations need to know how Copilot takes part in app design, which data sources it relies on, and where its limits lie within the Power Platform ecosystem. These details help them safeguard their digital environments while still benefiting from the efficiency and innovation that AI technologies like Copilot offer.

In summary, a risk assessment for Copilot in the Power Platform is a proactive step. It helps ensure that this AI technology improves operational efficiency and creativity in a way that is secure, responsible, and aligned with organizational values and guidelines.

After a hiatus, Carsten Groth revives his series on preparing risk assessments for Microsoft Copilot in the Power Platform. The series does not cover the Microsoft 365 Copilot experience in detail. He encourages readers to understand the technology in order to improve both security and user experience. The series argues that a thorough risk assessment matters even though Copilot is a built-in AI feature that Microsoft designs to be secure.

Drawing on his work in Power Apps Maker Studio, Carsten Groth focuses on the security of company data when Copilot is used for app development. He compares Copilot in Power Platform with Copilot in Microsoft 365 and stresses the importance of secure data sourcing, with specific reference to Microsoft Graph. The comparison brings out the differences and similarities in how Microsoft Copilot works across the two platforms.

Read the full article: Power Platform | Copilot Risk Assessment

While demonstrating how to build an app in Power Apps Maker Studio with Copilot's help, Groth shows how the AI interacts with the maker and where its limits lie. Copilot assists with app development tasks, but its capabilities appear confined strictly to Power Apps functions. It declines unrelated requests, such as summarizing emails or using external services like DALL-E.

Exploring Copilot's responses further, Groth finds an unexpected reference to the "VanArsdel Heating and Air Conditioning Canvas App" during a regional test. This raises questions about Copilot's data sources and its possible use of external data. The discovery leads to a broader investigation of how the AI works and underscores the need for a comprehensive risk assessment to understand and control data usage in Copilot-assisted applications.

A search for VanArsdel in the blogger's environment returns no direct results. Extending the search to include web results, however, uncovers related documentation, which raises questions about where the AI gets its knowledge. The observation that Copilot may draw on content from Power Apps Docs hints at the complex mechanics behind its data use. It also reinforces the need for a risk assessment to protect organizational data security and privacy.

The post closes with a preview of the next installments, which will explore Copilot's functionality further and give guidance on preparing effective risk assessment documentation. With this, Carsten Groth aims to help customers prepare more quickly for secure, responsible AI integration in their operations. This first entry in the series is an initial look at understanding and mitigating the risks of adopting AI solutions such as Microsoft Copilot in the Power Platform.

People also ask

What is a risk assessment in Power Platform?

This assessment identifies potential areas of risk in your current Power Platform deployment. Its goal is to develop governance strategies that both mitigate these risks and improve your business operations. A comprehensive governance model can increase the Power Platform's value while minimizing risk.

What does Copilot do?

Copilot encompasses artificial intelligence capabilities, including generative AI for both text and images, as well as analytical tools for text and data. Its primary goal is to expedite the processes of searching, composing, and brainstorming, enabling more efficient completion of tasks across the applications you use daily.

How many Microsoft Copilots are there?

Microsoft offers a growing family of Copilots rather than a single product. At Microsoft Ignite, the company added Copilot for Service and Copilot for Sales to this ecosystem. Both use AI to streamline and optimize enterprise operations in the service and sales sectors, respectively.

Is Microsoft Copilot free?

Copilot has free offerings, which are available at copilot.microsoft.com. It is designed to boost productivity by helping users find precise information, create original content, and complete tasks faster.
