Agent Readiness Framework: Get AI-Ready

Software Development Redmond, Washington

Microsoft expert unveils Agent Readiness Framework to design, scale, and govern AI agents with Copilot Studio and Azure

Key insights

- Agent Readiness Framework: A five-pillar model, built from research with 500 enterprise decision-makers, that helps organizations assess, plan, and scale AI agents. It shows what to measure, where gaps appear, and how to move from experiments to production with confidence.
- Five pillars: The core areas to address are AI strategy, business process mapping, technology and data, organizational readiness, and security and governance. Each pillar guides specific practices such as KPIs, workflow ownership, data access, training, and safeguards.
- Readiness levels: Organizations map to profiles such as Achievers, Visionaries, Operators, and Discoverers to benchmark progress. Use assessments to identify gaps and create a clear roadmap for scaling agents.
- Practical actions: Perform process mapping, assign data ownership, set refresh cadences, and define integration points for agents. Adopt tools for building and managing agents, and train teams in prompt engineering and agent optimization.
- Measured benefits: Teams with higher readiness deploy agents about 2.5x faster, enable autonomous workflows, cut handoffs, and speed issue resolution. Agents deliver repeatable value when backed by solid data and governance.
- Governance and culture: Establish model oversight, bias testing, audit trails, and clear policies for agent behavior. Pair responsible AI practices with executive sponsorship and training to build trust and avoid agent sprawl.

Video at a Glance: Microsoft Frames the Case for Agents

In a recent YouTube presentation, Microsoft introduces the Agent Readiness Framework, a practical model designed to help enterprises assess and prepare for AI agents. The presenter summarizes findings from interviews and surveys of 500 decision-makers and outlines a five‑pillar approach for designing, deploying, and scaling agentic systems. The video aims to give leaders a clear way to benchmark readiness and build roadmaps for production use, and it draws on real-world lessons from early adopters.

Explaining the Five Pillars

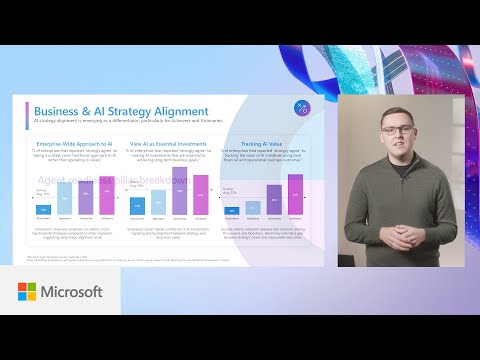

The framework groups essential activities into five pillars: business and AI strategy, business process mapping, technology and data, organizational readiness and culture, and security and governance. Each pillar targets a different dimension of readiness, from aligning executive goals to documenting workflows and establishing guardrails. By separating these elements, the framework helps organizations see where they excel and where they must invest to move from pilots to scaled operations.

Moreover, the video emphasizes that organizations fall into readiness categories such as Achievers, Visionaries, Operators, and Discoverers, and that those with higher readiness scale faster. The framework therefore serves as both a diagnostic and a planning tool, allowing teams to prioritize the improvements that unlock the most value. As a result, leaders can balance near-term wins against long-term foundations when plotting their AI roadmaps.
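To make the diagnostic idea concrete, here is a minimal sketch that ranks pillar gaps from a self-assessment so the weakest areas surface first. It rests on stated assumptions: the 1-to-5 scale, the target score of 4, and the `rank_gaps` helper are hypothetical, and the video does not describe how the framework actually scores pillars or assigns readiness profiles.

```python
# Pillar names as listed in the video summary.
PILLARS = (
    "Business and AI strategy",
    "Business process mapping",
    "Technology and data",
    "Organizational readiness and culture",
    "Security and governance",
)

def rank_gaps(scores: dict[str, int], target: int = 4) -> list[tuple[str, int]]:
    """Return (pillar, gap) pairs for pillars scoring below target, largest gap first.

    The 1-5 scale and the default target are hypothetical assumptions,
    not part of the published framework.
    """
    missing = [p for p in PILLARS if p not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    gaps = [(p, target - scores[p]) for p in PILLARS if scores[p] < target]
    # Python's sort is stable (even with reverse=True), so equal gaps keep pillar order.
    return sorted(gaps, key=lambda item: item[1], reverse=True)

# Hypothetical self-assessment scores for one organization.
scores = {
    "Business and AI strategy": 4,
    "Business process mapping": 2,
    "Technology and data": 3,
    "Organizational readiness and culture": 2,
    "Security and governance": 5,
}
for pillar, gap in rank_gaps(scores):
    print(f"{pillar}: {gap} point(s) below target")
```

Ranking by gap size rather than raw score keeps the output focused on where investment is needed relative to a chosen bar, which a team could raise as it matures.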

Technology and Data Foundations

Microsoft stresses that robust data and technology foundations are critical to successful agent deployment. Specifically, the video highlights the need for clear data ownership, defined refresh cadences, and unified access layers, and it cites tools like Microsoft Fabric as examples of unifying platforms. Reliable infrastructure reduces dependence on brittle integrations and gives agents access to accurate, timely information.
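Data ownership and refresh cadences can be tracked as simple metadata on each source an agent depends on. The following minimal sketch assumes a hypothetical `DataSource` record and a `readiness_issues` check that flags sources with no owner or a missed refresh window; the source names are invented for illustration, and none of this is an API of Microsoft Fabric or any other product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical metadata record for a source an agent reads from.
@dataclass
class DataSource:
    name: str
    owner: Optional[str]       # accountable person or team; None means unassigned
    refresh_every: timedelta   # agreed refresh cadence
    last_refreshed: datetime   # timezone-aware time of the last refresh

def readiness_issues(sources: list[DataSource], now: datetime) -> list[str]:
    """Flag sources with no owner or whose last refresh is older than their cadence."""
    issues = []
    for s in sources:
        if not s.owner:
            issues.append(f"{s.name}: no data owner assigned")
        if now - s.last_refreshed > s.refresh_every:
            issues.append(f"{s.name}: stale, last refreshed {s.last_refreshed:%Y-%m-%d}")
    return issues

now = datetime(2025, 1, 10, tzinfo=timezone.utc)
sources = [
    DataSource("crm_accounts", "Sales Ops", timedelta(days=1),
               datetime(2025, 1, 9, tzinfo=timezone.utc)),
    DataSource("policy_docs", None, timedelta(days=30),
               datetime(2024, 11, 1, tzinfo=timezone.utc)),
]
for issue in readiness_issues(sources, now):
    print(issue)
```

Keeping the cadence next to the owner means a stale source always points to someone who can fix it.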

These technology choices involve real tradeoffs: organizations must weigh centralization against agility, and platform-native models against open or third-party models, such as those from Anthropic and other providers. Centralized platforms can simplify governance and monitoring, but they may slow business units that need to experiment quickly. Conversely, distributed model access accelerates experimentation but complicates security, cost control, and traceability.

Organizational Readiness and Governance

The video also addresses human and policy dimensions that often determine project success or failure. It points to upskilling needs such as prompt engineering and agent optimization while emphasizing leadership communication and change management to build trust. In addition, governance practices—model oversight, audit trails, and bias testing—are portrayed as essential to keep agents accountable and compliant.

Furthermore, Microsoft highlights solutions like Entra Agent ID and Agent 365 as examples of tooling that lets organizations manage agents as well-governed non-human employees. These tools aim to provide identity, tracing, and role-based controls so operations teams can audit actions and each agent has a named, accountable owner. Nevertheless, these safeguards add implementation complexity and operational overhead, creating another balance to strike between safety and speed.
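The identity-plus-audit idea can be illustrated with a small, product-neutral sketch: each agent carries an identity, a human owner, and an allow-list of actions, and every attempted action is recorded whether or not it is permitted. The `AgentIdentity`, `AuditLog`, and `perform` names are hypothetical and do not reflect the APIs of Entra Agent ID or Agent 365.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical, product-neutral agent identity; not an Entra or Agent 365 API.
@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str                        # human accountable for the agent
    allowed_actions: frozenset[str]   # role-based allow-list of actions

@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, agent: AgentIdentity, action: str, allowed: bool) -> None:
        # Append-only record of who attempted what, when, and whether it was permitted.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent.agent_id,
            "owner": agent.owner,
            "action": action,
            "allowed": allowed,
        })

def perform(agent: AgentIdentity, action: str, log: AuditLog) -> bool:
    """Check the agent's permissions and log the attempt, including denials."""
    allowed = action in agent.allowed_actions
    log.record(agent, action, allowed)
    return allowed

agent = AgentIdentity("invoice-agent-01", "finance-ops", frozenset({"read_invoice"}))
log = AuditLog()
perform(agent, "read_invoice", log)     # permitted
perform(agent, "approve_payment", log)  # denied, but still recorded
for entry in log.entries:
    print(entry["agent_id"], entry["action"], entry["allowed"])
```

Logging denials as well as successes is the design choice that matters here: it lets reviewers see what an agent tried to do, not only what it was allowed to do.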

Tradeoffs and Key Challenges

The video does not shy away from the difficult tradeoffs organizations face when adopting agents. For instance, faster scaling often requires higher initial investment in data quality and governance, and leaders must decide whether to prioritize rapid prototyping or foundational work that reduces long-term risk. Consequently, organizations that underinvest in foundations may realize early wins but face costly technical debt and governance gaps later.

Moreover, complexity in business process mapping and integration can slow deployment even when models perform well in isolation. Mapping ownership, KPIs, and integration points takes time, and cross-functional coordination remains a frequent bottleneck. Finally, organizations must confront model reliability issues, bias mitigation, and monitoring at scale—challenges that demand ongoing attention rather than one-off fixes.

What Organizations Can Take Away

In summary, the YouTube video presents the Agent Readiness Framework as a pragmatic path from experimentation to enterprise-grade agent deployment. It combines a clear set of pillars with practical examples and emphasizes assessment, benchmarking, and iterative improvement. Organizations can use this approach to prioritize investments that reduce risk and accelerate value realization.

Ultimately, the framework invites leaders to balance speed, control, and cost. By aligning strategy, strengthening data foundations, investing in skills, and embedding governance, teams can scale responsibly while enabling innovation. As interest in agentic AI grows, the video offers a useful playbook for organizations seeking to adopt agents with confidence and clarity.
