
Comprehensive Guide to Microsoft Power Platform Dataflows

Enhance data-driven decision making with Power Platform Dataflows - Schedule, extract, transform and load data easily from various sources.

Power Platform Dataflows let users extract, transform, and load (ETL) data from various sources into a centralized data store such as Dataverse or Azure Data Lake, where tools like Power BI and Azure Synapse can work with it. This strengthens business intelligence and decision making by providing current, enriched data.

Power Platform Dataflows connect to many data sources, including widely used databases, SaaS applications, and flat files. Data from different systems can therefore be consolidated in one place, which makes it easier to use and analyze. Beyond extraction, dataflows also let users transform the data on its way to the destination.

Dataflows can be scheduled to run regularly so that the data in the destination stays current. Reports and dashboards that depend on this data are then up to date, and users can base their decisions on the most recent data.

Understanding Power Platform Dataflows

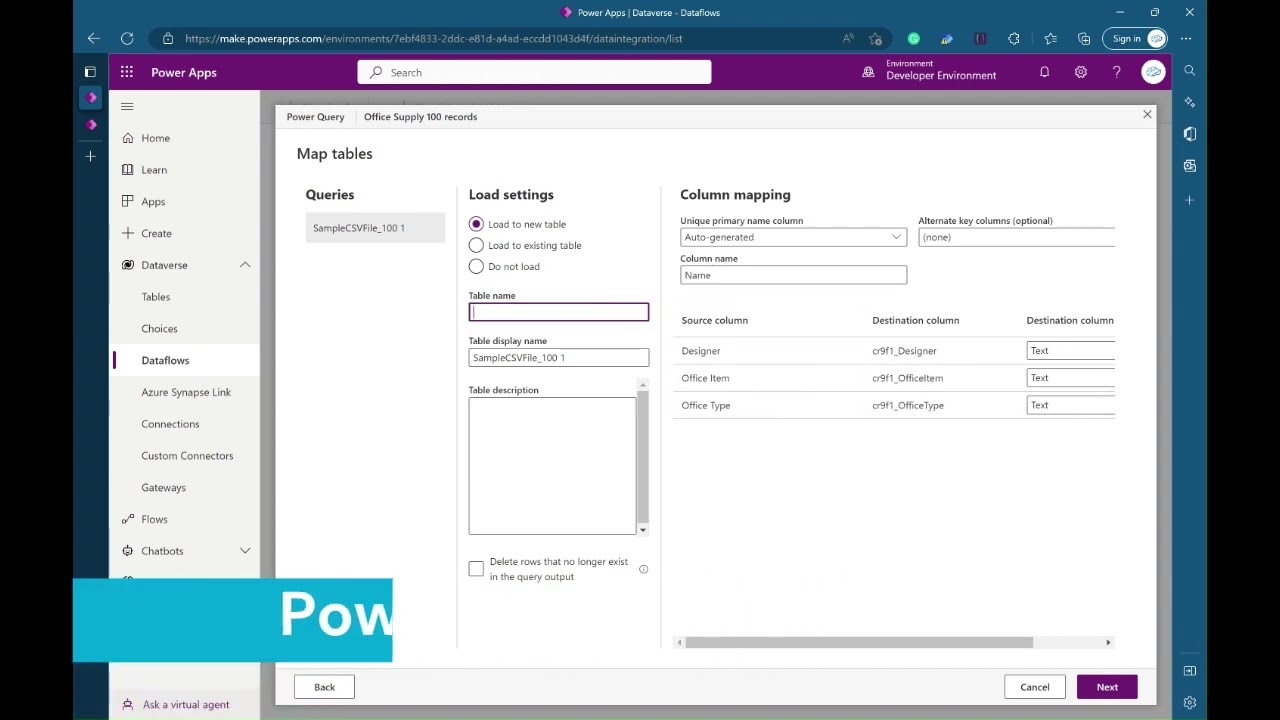

Frederik Bisback demonstrates the limitations of Power Platform Dataflows with a CSV file import test. The test shows good performance for uploads of up to 5000 records and a marked rise in upload time beyond the 5000-record mark.

Listed below are some tips for optimizing the performance of your Dataflows:

- Implement incremental refresh for new or updated records only

- Select the correct data type for each column

- Limit the records to 5000 for optimum performance.
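One way to act on the 5000-record tip is to split a large source into smaller pieces before handing it to a dataflow. The sketch below is only an illustration of that idea in plain Python. It is not part of the Dataflows product, and the function name `split_into_batches` and the constant `MAX_RECORDS_PER_UPLOAD` are made up for this example.

```python
from typing import Iterator, List, Sequence

# Threshold taken from the CSV import test described in the article.
MAX_RECORDS_PER_UPLOAD = 5000


def split_into_batches(
    records: Sequence[dict],
    batch_size: int = MAX_RECORDS_PER_UPLOAD,
) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `batch_size` records."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(records), batch_size):
        yield list(records[start:start + batch_size])


# Hypothetical source data: 12000 rows that would exceed the threshold.
rows = [{"id": i} for i in range(12000)]
batches = list(split_into_batches(rows))
print([len(batch) for batch in batches])  # [5000, 5000, 2000]
```

Each batch could then be written to its own CSV file and imported separately. Whether this actually shortens the total upload time would need to be measured for the data in question.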

Monitor the performance of your Dataflows regularly and adjust them as needed to keep them running efficiently.

Dataflows can import data in different scenarios, such as loading into a newly created table or inserting into an existing one. Depending on how you configure the dataflow, it either creates new records or updates existing ones in a table, matching on a key column chosen by the user. If no key is selected, the Dataflow creates new records.
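The key-column behaviour described above can be pictured with a small in-memory model. This is a conceptual sketch in Python, not how Dataflows are implemented or configured. The function `load_rows` and the column `account_no` are hypothetical names chosen for the illustration.

```python
from typing import Iterable, List, Optional


def load_rows(
    table: List[dict],
    incoming: Iterable[dict],
    key_column: Optional[str] = None,
) -> List[dict]:
    """Return a copy of `table` after loading `incoming` rows.

    With a key column, rows whose key already exists are updated in place
    and the rest are appended. Without a key, every row is appended.
    """
    result = [dict(row) for row in table]
    if key_column is None:
        result.extend(dict(row) for row in incoming)
        return result

    # Map each existing key value to its position in the result table.
    position = {row[key_column]: i for i, row in enumerate(result)}
    for row in incoming:
        key = row[key_column]
        if key in position:
            result[position[key]].update(row)   # update the existing record
        else:
            position[key] = len(result)
            result.append(dict(row))            # create a new record
    return result


existing = [{"account_no": "A1", "name": "Contoso"}]
incoming = [
    {"account_no": "A1", "name": "Contoso Ltd"},
    {"account_no": "A2", "name": "Fabrikam"},
]

print(len(load_rows(existing, incoming, key_column="account_no")))  # 2
print(len(load_rows(existing, incoming)))                           # 3
```

With the key selected, the row for `A1` is updated and only `A2` is added. Without it, both incoming rows become new records, which is how duplicates can appear.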

The author closes with an example that imports data into a Dataverse table related to a lookup table, and notes that every lookup value in the source must already exist in the lookup table, otherwise the upload fails.
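Because a missing lookup value makes the upload fail, it can help to check the source against the lookup table before running the dataflow. The following is a minimal sketch of such a pre-check, assuming both tables are available as lists of dictionaries. The function name and the `category` and `name` columns are hypothetical.

```python
from typing import Iterable, List


def find_missing_lookup_values(
    source_rows: Iterable[dict],
    lookup_rows: Iterable[dict],
    source_column: str,
    lookup_column: str,
) -> List[str]:
    """Return source values that have no matching row in the lookup table."""
    known = {row[lookup_column] for row in lookup_rows}
    referenced = {row[source_column] for row in source_rows}
    return sorted(referenced - known)


# Hypothetical lookup table and source file contents.
categories = [{"name": "Hardware"}, {"name": "Software"}]
products = [
    {"product": "Laptop", "category": "Hardware"},
    {"product": "Consulting", "category": "Services"},
]

missing = find_missing_lookup_values(products, categories, "category", "name")
print(missing)  # ['Services']
```

Any value reported here would need to be added to the lookup table, or corrected in the source, before the import.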

In conclusion, Power Platform Dataflows present a powerful and versatile approach for users to Extract, Transform, and Load data, simplify data manipulation procedures, and procure data-driven insights from different systems for better business outcomes.


Learn about Power Platform Dataflows

Power Platform Dataflows are used to extract, transform, and load (ETL) data from different sources into centralized storage, primarily Dataverse or Azure Data Lake. Once the data is centralized, BI and analytics tools such as Azure Synapse and Power BI can use it to support data-driven decisions.

Power Platform Dataflows offer several benefits, including easy connection to multiple data sources such as SaaS applications, widely used databases, and flat files. Users can extract data from these sources and gather it in a single location, which makes it easier to merge, use, and analyze data from various systems.

Beyond extraction, users can transform the data while it is being loaded into the Data Lake. Normalization, cleaning, and other transformations can be performed in an easily manageable drag-and-drop interface, which makes data manipulation accessible to users with little or no coding experience.

Additionally, Power Platform Dataflows can be scheduled to run regularly, which keeps the data at the destination current. Dashboards and reports built on that data always reflect the most recent state, helping users make well-informed decisions.

The performance of Power Platform Dataflows depends on several factors, including the complexity of the data being processed and the number of transformations applied. According to the results in the graph, performance is acceptable for uploads of up to 5000 records. Beyond 5000 records, upload times appear to rise steeply. Some tips for improving Dataflow performance include:

- Incremental Refresh: This feature loads only new or modified records into the data store rather than reprocessing the entire dataset each time. This significantly reduces the time and resources required to refresh the data.

- Appropriate Data Type: Using the correct data type for each column in the Dataflow can improve performance. For example, for columns that hold whole numbers, the “whole number” data type will be faster than the “decimal number” data type.

- For optimum performance, the number of records should be limited to 5000.
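The idea behind incremental refresh can be sketched in a few lines: keep the time of the last successful refresh and process only rows changed since then. In Dataflows this is a configuration option rather than code, so the Python below is purely conceptual. The function `select_changed_rows` and the `modified_on` column are assumptions made for the example.

```python
from datetime import datetime
from typing import Iterable, List


def select_changed_rows(
    rows: Iterable[dict],
    last_refresh: datetime,
    modified_column: str = "modified_on",
) -> List[dict]:
    """Keep only rows created or modified after the previous refresh."""
    return [row for row in rows if row[modified_column] > last_refresh]


# Hypothetical source rows, each carrying a last-modified timestamp.
source = [
    {"id": 1, "modified_on": datetime(2023, 7, 1)},
    {"id": 2, "modified_on": datetime(2023, 7, 20)},
    {"id": 3, "modified_on": datetime(2023, 7, 27)},
]

changed = select_changed_rows(source, last_refresh=datetime(2023, 7, 15))
print([row["id"] for row in changed])  # [2, 3]
```

Only two of the three rows are reprocessed here. On a large table where few rows change between runs, the saving grows accordingly.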

Monitor the performance of your Dataflows regularly and make adjustments where needed so that they keep running efficiently.

Additionally, the article includes several examples of Dataflows that help clarify how they work. The first sample demonstrates building a simple CSV file and importing it into a new table. The next sample imports a simple CSV file into an existing table in Dataverse. The final example demonstrates importing data into Dataverse that is related to a Lookup table.

Lastly, Power Platform Dataflows provide a reliable and flexible way to extract, transform, and load (ETL) data from various sources into Azure Data Lake or Dataverse, so that users can merge and analyse data from different systems and make data-driven decisions. These capabilities benefit data scientists, developers, and business analysts, helping them unlock the potential of their data to drive better business outcomes.
