Microsoft Copilot Studio: Chess Coach

A chess puzzle coach prototype built in Microsoft Copilot Studio compares the generative and classic Copilot modes and shows where tuning is still needed for chess rules and streaming

Key insights

- Chess Puzzle Coach is an experimental side project by Audrie built inside Microsoft Copilot Studio as test v1. It demonstrates a simple, interactive chess-puzzle assistant rather than a finished product.

- The agent aims to provide puzzle solving and coaching help for chess puzzles, such as suggesting moves, explaining tactics, and discussing lines. It can both generate and analyze puzzles in chat-style interactions.

- Current limitations include overly chatty responses and gaps in chess rule knowledge, for example missing threefold repetition detection. Audrie plans to tighten response control and add missing rule awareness to improve reliability.

- Technically, Copilot Studio connects enterprise services and uses OpenAI models, letting creators build custom assistants and workflow automations. The platform supports multi-agent orchestration and integrations with Microsoft 365 and Power Platform features.

- The project highlights Copilot Studio’s power to create domain-specific agents for use cases like interactive learning, training, or casual gaming within trusted apps. It shows how small, focused copilots can extend productivity platforms into new areas.

- Public details remain sparse as of August 2025, so this is mainly a proof-of-concept rather than a public release (limited public information). Next steps include refining responses, testing generative vs. classic modes, and monitoring official Copilot Studio updates and previews for changes.

Quick summary of the video

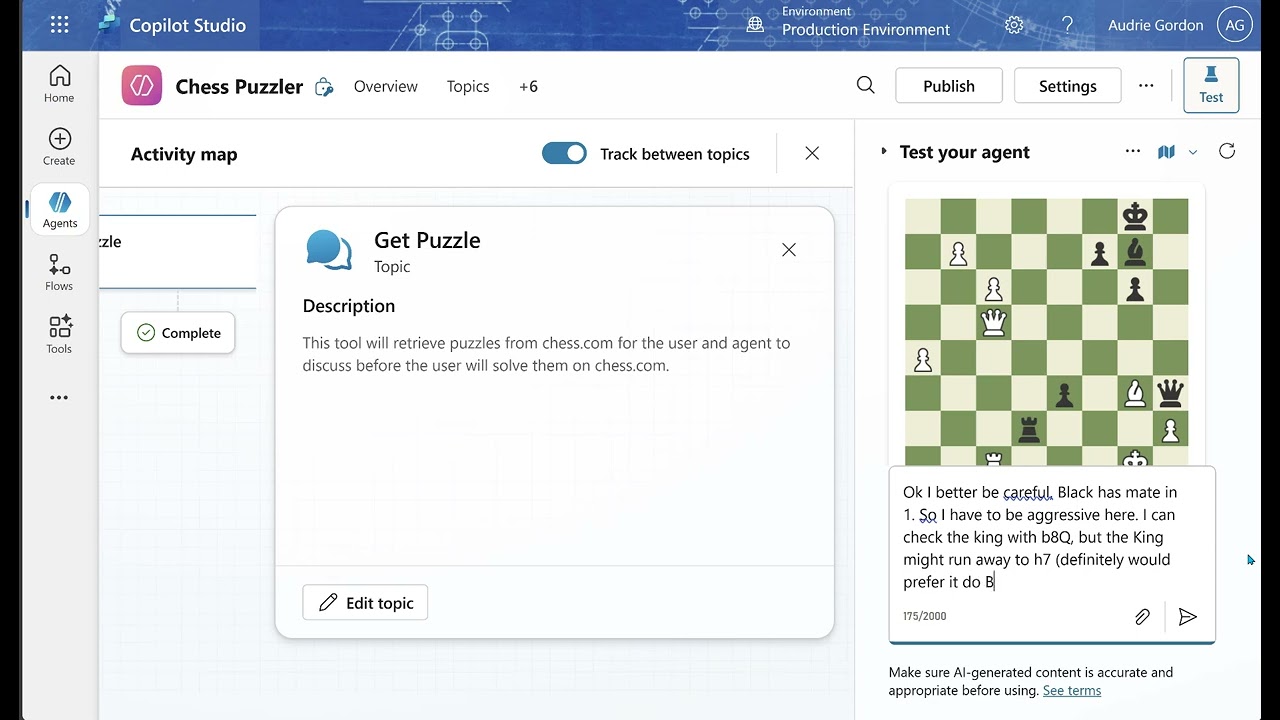

In a brief YouTube demo, creator Audrie Gordon showcases an early prototype titled Chess Puzzle Coach built inside Microsoft Copilot Studio. The video presents a hands-on test labeled Test v1, where the agent interacts with a random puzzle from chess.com and attempts to explain moves and strategy. Throughout the clip, the author notes the tool feels overly talkative and mentions plans to refine its verbosity to avoid streaming interruptions.

The creator also flags factual gaps, for example the assistant missing chess rules such as threefold repetition. The demo serves more as an exploratory prototype than a finished product, and the author invites viewers to collaborate on further development. Consequently, the video reads as both a status update and a call for community input rather than a formal launch announcement.

What the demo illustrates about Copilot Studio

The clip highlights how Copilot Studio lets developers compose focused assistants that handle domain tasks, in this case chess puzzles. By wiring natural language models to a puzzle source, the demo shows a workflow where an AI can retrieve a position, propose candidate moves, and discuss ideas with the user. This approach underlines the platform’s intent to make specialized, context-aware agents accessible to creators outside large engineering teams.

At the same time, the video underscores how early experiments rely on tweaking both model behavior and integration settings to get acceptable results. The author alternates between a “generative” mode and a “classic” mode to test which produces clearer, more reliable responses. Therefore, the demo provides a practical look at how creators must balance flexibility and control when building Copilot agents.

Key findings from the Chess Puzzle Coach test

First, the assistant’s tendency to be verbose created streaming hiccups and a less smooth user experience, which the author plans to address by controlling response length and pacing. Second, the agent showed knowledge gaps on certain chess rules, suggesting the need for targeted knowledge injections or rule-checking logic. As a result, the prototype illustrates that natural language models can present plausible guidance but still miss precise, rule-bound edge cases.

Third, toggling between model styles revealed tradeoffs: the generative style produced richer, more exploratory commentary while the classic mode tended to be more conservative and predictable. Consequently, developers must decide whether they value expressive coaching or strict correctness, and they may need to combine modes or layer validation checks to achieve both.
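One way to picture a layered validation check is a small post-processing step that reads the move the assistant recommends and compares it with a list of legal moves supplied by a trusted source. The sketch below is only an illustration and is not taken from the video: the function names, the regular expression, and the idea that a separate component provides `legal_moves` are all assumptions. It checks only the first move mentioned in a reply, since later moves in a line belong to later positions.

```python
import re

# Rough pattern for moves in standard algebraic notation (SAN),
# e.g. Nf3, exd5, O-O, e8=Q+. It is a heuristic, not a full SAN parser.
SAN_PATTERN = re.compile(
    r"\b(?:O-O(?:-O)?|[KQRBN]?[a-h]?[1-8]?x?[a-h][1-8](?:=[QRBN])?)[+#]?(?!\w)"
)


def extract_san_moves(reply: str) -> list[str]:
    """Return SAN-looking moves found in a reply, without check/mate suffixes."""
    return [move.rstrip("+#") for move in SAN_PATTERN.findall(reply)]


def first_move_is_legal(reply: str, legal_moves: set[str]) -> bool:
    """Check the first move mentioned against a trusted set of legal moves.

    `legal_moves` is assumed to come from a rule engine or chess library,
    written in SAN without '+' or '#' suffixes.
    """
    moves = extract_san_moves(reply)
    return bool(moves) and moves[0] in legal_moves


if __name__ == "__main__":
    reply = "The key idea is Qxd5+, and after Kh8 White follows with Nf7#."
    print(extract_san_moves(reply))
    print(first_move_is_legal(reply, {"Qxd5", "Nf3"}))
```

A reply that fails the check could be regenerated or answered in the more conservative mode, which keeps the expressive commentary while adding a correctness gate in front of it.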

Tradeoffs and technical challenges

Balancing conversational depth and factual accuracy is central to this project. If the agent speaks more, it can be a better tutor, but that increases token usage and adds latency, which in turn risks streaming issues. Conversely, limiting output reduces streaming load and may make the assistant snappier, yet it might fail to offer helpful explanations for learners who need detailed reasoning.

Moreover, encoding chess rules reliably demands explicit checks beyond raw model responses. The developer might integrate a rule engine or a chess library to validate moves and detect conditions like threefold repetition or stalemate. However, adding such systems increases complexity and testing overhead, which puts pressure on project timelines and raises governance questions when such agents move from hobby demos to production tools.
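As a rough illustration of what an explicit rule check could look like, the sketch below detects threefold repetition from a history of positions written as FEN strings. It is a minimal standard-library example under stated assumptions, not the author's implementation: the function names are hypothetical, and it assumes some other component records a FEN string after every move. Two positions are treated as the same when piece placement, side to move, castling rights, and the en passant field all match; the move counters at the end of the FEN string are ignored.

```python
from collections import Counter


def position_key(fen: str) -> str:
    """Keep the four FEN fields that identify a position for repetition:
    piece placement, side to move, castling rights, en passant square."""
    fields = fen.split()
    if len(fields) < 4:
        raise ValueError(f"not a FEN string: {fen!r}")
    return " ".join(fields[:4])


def has_threefold_repetition(fen_history: list[str]) -> bool:
    """Return True if any position occurs at least three times in the history."""
    counts = Counter(position_key(fen) for fen in fen_history)
    return any(n >= 3 for n in counts.values())


if __name__ == "__main__":
    # Both sides shuffle a knight out and back twice (Nf3 Nf6 Ng1 Ng8).
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq -"
    after_nf3 = "rnbqkbnr/pppppppp/8/8/8/5N2/PPPPPPPP/RNBQKB1R b KQkq -"
    after_nf6 = "rnbqkb1r/pppppppp/5n2/8/8/5N2/PPPPPPPP/RNBQKB1R w KQkq -"
    after_ng1 = "rnbqkb1r/pppppppp/5n2/8/8/8/PPPPPPPP/RNBQKBNR b KQkq -"
    cycle = [after_nf3, after_nf6, after_ng1]
    history = (
        [start + " 0 1"] + [f + " 0 1" for f in cycle]
        + [start + " 4 3"] + [f + " 0 1" for f in cycle]
        + [start + " 8 5"]
    )
    print(has_threefold_repetition(history))
```

Comparing the raw en passant field is slightly stricter than the official rule, which only counts en passant when the capture is actually possible, so a production check would more likely delegate to an established chess library (python-chess is one example) than rely on string comparison.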

Implications and next steps

Although still informal, the video signals broader possibilities for specialized Copilot agents in education and casual learning. If refined, a chess puzzle coach could become a lightweight tutor in game sites or study tools, helping players practice tactics with guided reasoning. Yet, commercial or enterprise adoption would require stronger accuracy, logging, and governance measures to meet user trust and compliance standards.

The author’s invitation to collaborate suggests an open, iterative roadmap where community feedback helps prioritize improvements such as rule coverage, response tuning, and multi-mode orchestration. For readers following AI agent development, the demo is a useful snapshot of practical challenges and choices, showing both the creative potential of Copilot Studio and the pragmatic work needed to make assistants reliable.
